[Update April 15, 2019] This blog post covers the 2018 course which you can find here.

(Copyright fast.ai)

(Copyright fast.ai)

Most approaches teaching machine and deep learning can be cumbersome and time-consuming: Stepping through all the details of (basic) linear algebra, activation functions and slowly building a neural network, so that after a few weeks/months, you are able to build an image classifier, if you have actually powered through…

Do not get me wrong, understanding the intuition and fundamentals of linear algebra and neural networks are an important and invaluable asset in achieving great results.

But…

Sometimes…

You just want to get the results, seeing what it can do on a human level, before delving through all the details.

And this is where fast.ai comes in.

Fast.ai’s unique approach

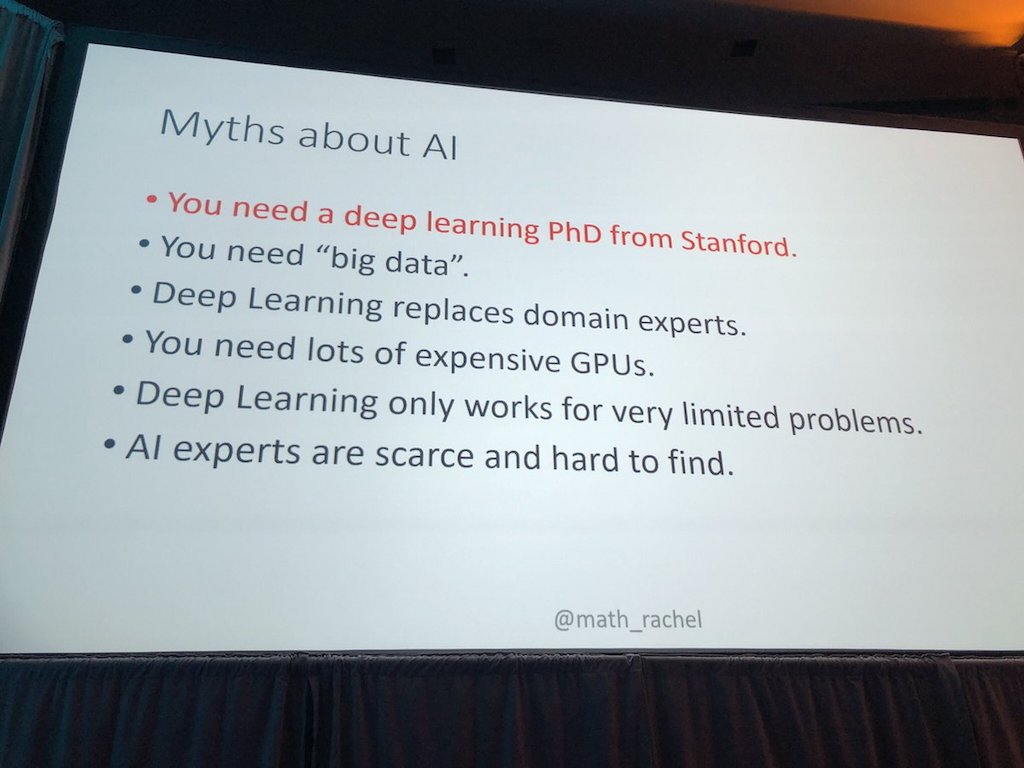

The Fast.ai course is a top-down approach to teaching you the basics as well as the latest and greatest in deep learning, starting from a simple 4-line classifier without having to worry too much about all the intricacies of such a solution, debunking AI-myths along the way:

(Slide credits to @math_rachel)

(Slide credits to @math_rachel)

Or as Jeremy Howard - founder of fast.ai - puts it in the introduction video:

Get you up and running with deep learning in practice,

with a coding first approach,

focussing on getting world-class results.

What I expected to learn

I came across fast.ai long before I actually started watching the videos. Before that, I wanted to and did complete courses such as Andrew Ng’s Machine Learning course and deeplearning.ai. Both pack an enourmous amount of machine and deep learning expertise, but require you to push through the very basics to distil all the value. Looking at the fast.ai course, I was intrigued by their premise of practicality.

First, I had not really set up an actual machine to train deep learning models. Previous courses where usually on Coursera, where you could train your models on their cloud infrastructure, without having to worry about deploying, training, updating, … Although I had dedicated a post on running those Coursera notebooks offline, mostly for future reference at that time.

Coming from the Coursera courses, I really had no idea how to enter a Kaggle competition to compete with other practitioners - both practically as well as in applying what I had learned. For those who have not yet heard of Kaggle, it is a place where data scientists can compete creating predictive models for datasets/challenges uploaded by companies or other users. It is a great place to hone your data science skills in general. I thought Fast.ai would help me to get started and improve my skills to compete, after all, Jeremy was the top ranked participant in data science competitions in both 2010 and 2011.

Additionally, I was triggered by the promise of “world-class results”. When starting the course, I was aware of what type of architectures were typically used in which situations, but was looking forward to get the latest and greatest techniques under my belt. (Note: deeplearning.ai also does a great job at this, but sticks more to the foundational stuff imho)

Lastly, having picked up some Keras and Tensorflow in previous courses, I was looking forward to producing great results with the fast.ai library, which is really at the heart of the course.

Observations after taking the course a first time

Full disclore, at the time of writing, I have:

- completed the part 1 video series;

- restarted part 1: reading all the papers and independently programming all the notebooks;

- progressed half-way through the part 2 video series.

Practical approach

There is absolutely no lie about the “practicality” aspect of the course. :)

In the first lesson you are guided through setting up your own training machine and presented with a number of options so you can find the one best for you (in alphabetical order: AWS, Azure, Crestle, GCE, Paperspace, your own box…).

From day one, you will also be building actionable, world-class models, starting with a high-level, abstracted solution and gradually digging deeper to the relevant details. The course is very hands-on and encourages you to write the code yourself. (more on that later)

Once you have completed the first part, you will also be encouraged to start reading through the scientific papers, while explaining they are not as scary as they appear to be.

Forum and community

Next to the videos and notebooks, fast.ai has an active forum and community where you can ask questions or join discussions on deep learning topics. From what I have seen so far, this is one of the more active forums, focussing on more than finishing concrete exercises in order to “pass” the course.

The fast.ai repository is in constant movement and gets updated on a regular (read almost daily) basis.

Less structured than other MOOCs

The videos are recordings of a course at The Data Instute at USF which means they are not as slick as most of the MOOCs you find online. The main difference I had to wrap my head around was the fact there was no

“course material => pop quiz => (graded) exercise”-cycle

which is very common in MOOCs. The videos and notebooks are the material and it is up to you to study your way through it, which forces you to focus on making sure you get all the takeaways from the each video.

Additionally, I found the videos are not something you pick up if you have a couple of minutes to spare. While other MOOCs require you to complete a set of (short) videos each week, it easier to take 10 minutes to watch through one of those videos, marking it as done but because Fast.ai uses a code/notebook approach, I had to sit down and take the time to complete a video, in order to build up the mental model to keep track of what was being explained.

The fast.ai library

Throughout the entire course the fast.ai library (fast.ai is both the name of the course and the open source library) is used to teach and create state-of-the-art results. The library is a high-level abstraction on top of PyTorch, shielding you from the lower-level building blocks and wrapping functionality in a set of convenience methods, which sometimes feel like they were created to support the class. However, the library yields some amazing results when it comes to creating deep learning models.

While taking my second run of the course, I plan to get a more practical feel of using it for more of my own experiments and hope to update this section later on with more findings…

Practical things

From my experience so far, the way to get the most out of the course is:

1. Watch the videos in Part 1

The first time around, it is ok not to get all the intricate details. Watch every video and follow along while running the code in your own Jupyter notebook. The main idea is for you to get the high-level concepts. Understand what problems you can solve with the concepts explained in the lesson and gradually work your way through the details. Jeremy will occassionaly give an “assignment” at the end of the lecture, be sure to take him up on that. They are always practical in nature and really give you a feel of what effort is required to do it yourself and present a handy learning opportunity.

2. Start the videos of Part 2

Having worked through Part 1, you should have a good grasp of the basics of deep learning, with some insights few other courses will be able to give you. Now it is time to start Part 2, opening you up to state-of-the-art deep learning papers, techniques and their practical implementations. Just like Part 1, try to follow along in your notebook, run the code and put your mind to understanding the concepts and their applications.

3. Restart the Part 1 videos

In parallel with starting to watch the Part 2 videos, it is now time to restart the Part 1 videos and really get your hands dirty. As you understand all the high level concepts, the best way to move forward is to push yourself to rebuild the notebooks all by yourself. The first time we took the easy road, but in order to truly master the topic, you will benefit most from sweating on actually re-writing the notebook code yourself. From time to time, you could/should also read through the fast.ai source code, which contains a massive amount of information for you to distil.

Remember, fast.ai course is a “do”-course. Watching it, will only get you so far…

4. Restart the Part 2 videos

The same as the previous logic also applies to the Part 2 videos. Try to understand what is being done and push yourself to reproduce the results. If you want to stand on the shoulders of giants, you sometimes have to do the climbing yourself…

5. Challenge yourself to read the papers

A final thing, which can seem a little daunting at first, is to start reading and implementing the papers mentioned in the videos. I have decided to read/study them in order of appearance, not entirely sure if that is the best way, but that is what I am doing it at the moment.

To reassure you, here are some important lessons I already learned from fast.ai concerning AI papers:

- Focus on the essence of what the paper is trying to explain

- Papers are not necessarily written to be understood

- complex math is sometimes introduced to hide simple concepts;

- formulas are written in their entirity instead of reducing complexity;

- the code to reproduce the papers is not necessarily that hard to write, but it takes some effort to learn to translate between Python code and paper math;

- over time, you will learn to read them more rapidly.

- to stay on top in deep learning, reading/understanding/reimplementing papers is part of the work you have to put in.

Future posts

In this post I tried to give you a sense of what the fast.ai course, library and community could mean to you in your deep learning endeavors.

On top of that, I am also planning a series of posts summarizing every video lecture, mostly for reference but it will help me in my studies as well. Here is a preliminary structure I plan on using for every video:

- Summary of the topics covered

- What you are expected to learn from the video

- which notebooks were used

- which links were discussed

- which papers were mentioned

Hope you enjoy!